“Quality means doing it right when no one is looking.” — Henry Ford

In the high-stakes world of automotive manufacturing, precision is everything. A single undetected flaw on the assembly line can lead to product recalls, regulatory issues, or damaged brand reputation. Yet many factories still depend on manual inspection or end-of-line testing, which often comes too late to prevent the problem.

Today, more manufacturers are turning to AI-powered in-line quality control, where defects are detected and flagged in real time, during production. This article explains how Scanflow’s Quality Control solution enables real-time defect detection and how it’s transforming production lines across the automotive industry.

The Problem with Traditional Quality Control

Historically, automotive plants have depended on end-of-line inspection, manual visual checks, and random sampling. These methods catch problems only after the part is built, are prone to inconsistency and fatigue, and may completely miss intermittent defects. This reactive approach results in increased rework and waste, delayed issue detection, and the risk of customer-facing failures. A study by McKinsey estimates that up to 70% of defects in manufacturing go unnoticed until late in the process — often when it’s too costly to fix.

What is In-Line AI-Based Quality Control?

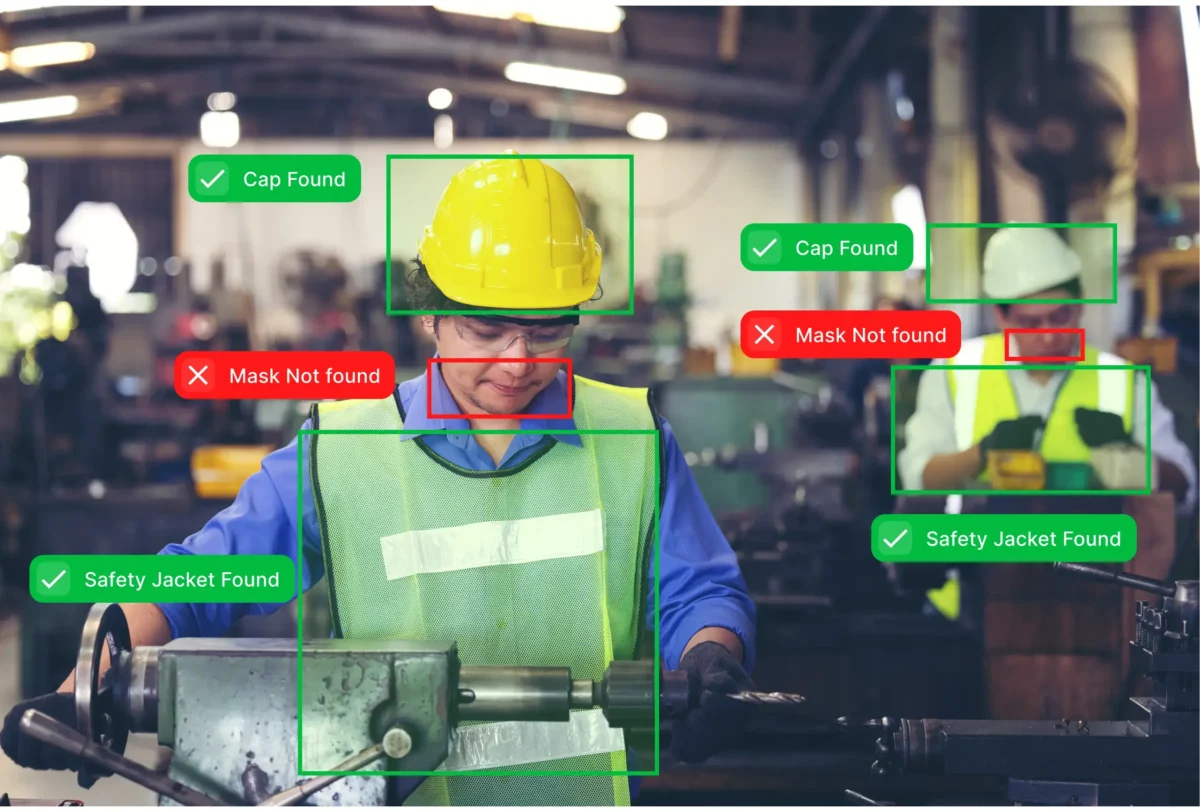

In-line quality control refers to the practice of inspecting components as they move through the production line. With AI and computer vision, this inspection is automated, fast, and highly accurate — operating without disrupting production speed. These systems can scan parts for flaws, analyze images in milliseconds, and alert operators when a defect is found. This proactive model helps manufacturers contain quality issues early and reduce defect-related costs dramatically.

How Scanflow’s AI QC Solution Works

Scanflow deploys both fixed and mobile inspection systems powered by AI and computer vision, trained using thousands of annotated images from specific parts and components. Cameras are installed at key points across the production line, capturing images of components as they pass through. AI algorithms detect abnormalities like cracks, burrs, deformation, or foreign particles. Real-time alerts are pushed to dashboards or operator screens, and all inspection data is logged for traceability and process improvement.
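The capture, detect, alert, and log flow described above can be sketched in a few lines of Python. This is an illustrative outline only, not Scanflow's SDK: `InspectionStation`, `InspectionResult`, and `toy_detector` are hypothetical names, and the detector is a trivial brightness check standing in for a trained vision model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List, Optional, Tuple

Image = List[List[int]]  # assumption: a grayscale image as rows of 0-255 values


@dataclass
class InspectionResult:
    part_id: str
    station: str
    defect: Optional[str]  # None means no defect was found
    score: float
    timestamp: str


@dataclass
class InspectionStation:
    """One camera position on the line (hypothetical structure)."""
    name: str
    detect: Callable[[Image], Tuple[Optional[str], float]]
    alert: Callable[[InspectionResult], None]
    log: List[InspectionResult] = field(default_factory=list)

    def inspect(self, part_id: str, image: Image) -> InspectionResult:
        label, score = self.detect(image)
        result = InspectionResult(
            part_id, self.name, label, score,
            datetime.now(timezone.utc).isoformat(),
        )
        self.log.append(result)   # every inspection is logged for traceability
        if label is not None:
            self.alert(result)    # only defects are pushed to the operator
        return result


def toy_detector(image: Image) -> Tuple[Optional[str], float]:
    """Stand-in for a trained model: flags a pixel far darker than the mean."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    score = (mean - min(pixels)) / 255.0
    return ("dark_spot", score) if score > 0.3 else (None, score)


if __name__ == "__main__":
    alerts: List[InspectionResult] = []
    station = InspectionStation("station-1", toy_detector, alerts.append)
    station.inspect("part-001", [[200, 200], [200, 200]])  # uniform surface
    station.inspect("part-002", [[200, 200], [200, 40]])   # one dark pixel
    print(len(station.log), "inspected,", len(alerts), "flagged")
```

In a real deployment the `detect` callable would wrap the trained model and `alert` would write to a dashboard or operator screen. Keeping them as injected functions means the same loop can serve fixed and mobile stations.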

Key Benefits of Real-Time In-Line Quality Control

AI systems enable continuous inspection of 100% of production output, ensuring no part goes unchecked. Defects are caught as soon as they occur, allowing immediate intervention and preventing process drift. These systems deliver consistent performance 24/7 without fatigue or distraction. Every inspection is logged and visualized, offering insights that improve upstream processes. Early detection also reduces rework costs, scrap, and downtime.

Types of In-Line Inspections Enabled by AI

Visual surface inspection is ideal for identifying cracks, scratches, and contamination on metal casings, painted parts, or injection-molded components. Dimensional accuracy checks help verify hole positions, gaps, and alignments on complex assemblies like gear housings or dashboards. Assembly verification ensures the presence and proper installation of fasteners, connectors, labels, and seals. Anomaly detection allows the system to recognize unknown or rare flaws by understanding what normal looks like, adapting to process drift over time. This inspection model provides the flexibility to scale across different component types without building isolated systems.
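To make the anomaly detection idea concrete, here is a minimal sketch of learning what normal looks like and flagging anything that strays too far from it. It assumes each part has already been reduced to a few numeric measurements (the gap and hole-offset values below are made up for illustration). Production systems typically work on learned image features instead, and `NormalProfile` is a hypothetical name rather than part of any Scanflow API.

```python
import statistics
from typing import List, Sequence


class NormalProfile:
    """Per-feature mean and spread learned from known-good parts."""

    def __init__(self, good_samples: Sequence[Sequence[float]], threshold: float = 3.0):
        columns = list(zip(*good_samples))
        self.means: List[float] = [statistics.fmean(c) for c in columns]
        # Floor the spread so a perfectly constant feature cannot divide by zero.
        self.stdevs: List[float] = [max(statistics.stdev(c), 1e-9) for c in columns]
        self.threshold = threshold

    def score(self, sample: Sequence[float]) -> float:
        """Largest z-score across features: how far the part is from normal."""
        return max(
            abs(x - m) / s
            for x, m, s in zip(sample, self.means, self.stdevs)
        )

    def is_anomaly(self, sample: Sequence[float]) -> bool:
        return self.score(sample) > self.threshold


# Illustrative measurements in mm: (panel gap, hole offset) for good parts.
good_parts = [
    (2.00, 0.10),
    (2.02, 0.12),
    (1.98, 0.09),
    (2.01, 0.11),
    (1.99, 0.10),
]
profile = NormalProfile(good_parts)

print(profile.is_anomaly((2.01, 0.10)))  # close to the good parts
print(profile.is_anomaly((2.30, 0.10)))  # gap far outside the normal spread
```

Because the profile is built only from good parts, it needs no examples of the defect itself, which is what allows rare or previously unseen flaws to be caught. Refitting the profile periodically on recent accepted parts is one simple way to follow gradual process drift.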

Fast, Scalable Implementation

Scanflow offers rapid deployment with pre-trained models that can be tailored to specific parts and processes. It integrates easily with MES, ERP, and dashboard systems. With minimal hardware and a powerful SDK, manufacturers can go live in under 30 days and start detecting defects from day one.

“You can’t improve what you don’t measure.” — Peter Drucker

With Scanflow, you don’t just measure quality; you act on it instantly.

Why Real-Time In-Line QC is the Future

As the automotive sector moves toward smart factories, traditional methods are giving way to agile, AI-driven systems. Manufacturers now understand that quality assurance works best when it’s embedded directly into the line. If you’re still relying on end-of-line inspections or random sampling, it’s time to modernize. In-line AI QC helps avoid rework, meet OEM compliance, and improve overall production efficiency.

Ready to Detect Defects Before They Become a Problem?

Start with the right technology:

Explore Scanflow’s Quality Control Solution

See how it works in automotive manufacturing

Connect with our team for a tailored walkthrough of your plant needs.